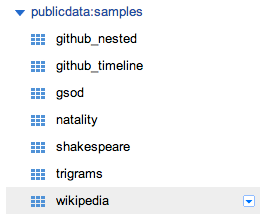

This week on Google developers live Israel we wanted to show the power of Big Query. What is Big Query? Well, in todays world when everyone like to use the term “big data” you need to have the capabilities to querying massive datasets. This can be time consuming and expensive without the right knowledge, hardware and infrastructure. Google BigQuery solves this problem by enabling super-fast, SQL-like queries against append-only tables, using the processing power of Google’s infrastructure. In order to get started quickly and ‘test the water’ there is a powerful online tool that let you query pre-existing datasets like: wikipedia, Github etc’. If you like to type in command line, there is also a command line tool. Before you start your first project you should signup for BigQuery (yes! it’s open now for all). You should log in to the Google APIs Console and make sure you set a new project and allow Big Query API on it. You should also, enable billing if you have not done so in the past. Lastly, head to bigquery.cloud.google.com and click on one of the public datasets that are on the left sidebar.

This week on Google developers live Israel we wanted to show the power of Big Query. What is Big Query? Well, in todays world when everyone like to use the term “big data” you need to have the capabilities to querying massive datasets. This can be time consuming and expensive without the right knowledge, hardware and infrastructure. Google BigQuery solves this problem by enabling super-fast, SQL-like queries against append-only tables, using the processing power of Google’s infrastructure. In order to get started quickly and ‘test the water’ there is a powerful online tool that let you query pre-existing datasets like: wikipedia, Github etc’. If you like to type in command line, there is also a command line tool. Before you start your first project you should signup for BigQuery (yes! it’s open now for all). You should log in to the Google APIs Console and make sure you set a new project and allow Big Query API on it. You should also, enable billing if you have not done so in the past. Lastly, head to bigquery.cloud.google.com and click on one of the public datasets that are on the left sidebar.

After you select a source – feel free to test it with the browser tool and see how the data looks like. For example: If you wish to see what are the top 10 revised articles on the english version of wikipedia type:

SELECT TOP(title, 10) as title, COUNT(*) as revision_count FROM [publicdata:samples.wikipedia] WHERE wp_namespace = 0;

These are the results I got on 24/7/2013:

| Row | title | revision_count |

| 1 | George W. Bush | 43,652 |

| 2 | List of World Wrestling Entertainment employees | 30,572 |

| 3 | Wikipedia | 29,726 |

| 4 | United States | 27,433 |

| 5 | Michael Jackson | 23,245 |

| 6 | Jesus | 21,767 |

| 7 | Deaths in 2009 | 20,695 |

| 8 | World War II | 20,522 |

| 9 | Britney Spears | 20,459 |

| 10 | Wii | 20,199 |

HttpArchive is now part of Big Query

I’ve been helping this wonderful open-source project in the past and I passion about the information its contain and the way it allow developers to access it. The site provides a number interesting stats and trends, but the data on the site only scratches the surface. Since the data (which you can download) is now around 400GB, it won’t be a ‘walk in the park’ to export-import it to your own database. Thanks to big query and Ilya Grigorik we have now the option to ask questions on this dataset and get results quickly. Moreover, with big query API and App Script code we can see trends and have queries that are more complicated. In order to have a pointer to the data and to work with it, you should click down the arrow beside “API Project”: Switch to project -> Display project -> enter “httparchive”. Next step, open a new google sheet and go to ‘Tools’ -> ‘Script Editor’. You can work with this example code that will give you a start for working with big query.

Ready?

Google Developer Live Israel

Got interesting questions for httparchive?

There are many options to answer you questions. The powerful aspect of Apps Script, is in the automation. We can run a job and later get the results in our google sheet. So we have a nice way to share these results with co-workers, friends etc’. We can take it a step forward, and have a customize trigger that will run our ‘dashboard’ on a daily bases, crunch the numbers and email us the results. Few examples for interesting queries on http archive:

- How fast is G+ / FB / Twitter over time?

- What are the top 10 sites that are being used by others?

- What is the most popular JS framework?

- Which site from the top 100 is the slower? Does it correlate with its size?

- What is the correlation between JS, CSS sizes and the site speed?

- What is the % of error pages on the top 25 fastest sites in the world?

Code Example(s)

| function runQueryThirdPartyUsage(startRow, startCol) { | |

| if (startRow === undefined) startRow = 25; | |

| if (startCol === undefined) startCol = 1; | |

| var projectNumber = 'bq-httparchive-1'; | |

| var sheet = SpreadsheetApp.getActiveSheet(); | |

| var dates = ['2013_06','2013_05','2013_04','2013_03','2013_02','2013_01', | |

| '2012_12','2012_11','2012_10','2012_09','2012_08','2012_07', | |

| '2012_06','2012_05','2012_04','2012_03','2012_02','2012_01']; | |

| var sql = 'SELECT date, third_party, num_requests FROM '; | |

| for (var i=0; i<dates.length; i++) { | |

| sql += '(SELECT "'+dates[i]+'" date, DOMAIN(req.url) third_party, COUNT(*) num_requests FROM [httparchive:runs.'+dates[i]+'_01_requests] as req JOIN ( \ | |

| SELECT DOMAIN(url) self, pageid \ | |

| FROM [httparchive:runs.'+dates[i]+'_01_pages] \ | |

| ) as pages ON pages.pageid = req.pageid \ | |

| WHERE DOMAIN(req.url) != pages.self \ | |

| GROUP BY third_party ORDER BY num_requests desc LIMIT 10),' | |

| } | |

| var queryResults; | |

| // Inserts a Query Job | |

| try { | |

| Logger.log("Our SQL: " + sql); | |

| queryResults = BigQuery.Jobs.query(projectNumber, sql); | |

| } | |

| catch (err) { | |

| Logger.log(err); | |

| Browser.msgBox(err); | |

| return; | |

| } | |

| // Check on status of the Query Job | |

| while (queryResults.getJobComplete() == false) { | |

| try { | |

| queryResults = BigQuery.Jobs.getQueryResults(projectNumber, queryJob.getJobReference().getJobId()); | |

| } | |

| catch (err) { | |

| Logger.log(err); | |

| Browser.msgBox(err); | |

| return; | |

| } | |

| } | |

| // Update the amount of results | |

| var resultCount = queryResults.getTotalRows(); | |

| var resultSchema = queryResults.getSchema(); | |

| var resultValues = new Array(resultCount); | |

| var tableRows = queryResults.getRows(); | |

| // Iterate through query results | |

| for (var i = 0; i < tableRows.length; i++) { | |

| var cols = tableRows[i].getF(); | |

| resultValues[i] = new Array(cols.length); | |

| // For each column, add values to the result array | |

| for (var j = 0; j < cols.length; j++) { | |

| resultValues[i][j] = cols[j].getV(); | |

| } | |

| } | |

| // Update the Spreadsheet with data from the resultValues array, starting from cell A1 | |

| sheet.getRange(startRow, startCol, resultCount, tableRows[0].getF().length).setValues(resultValues); | |

| Browser.msgBox("Done! All the answers should be on the sheet"); | |

| } |

| SELECT pages.pageid, url, cnt, libs, pages.rank rank FROM [httparchive:runs.2013_06_01_pages] as pages JOIN ( | |

| SELECT pageid, count(distinct(type)) cnt, GROUP_CONCAT(type) libs FROM ( | |

| SELECT REGEXP_EXTRACT(url, r'(dojo|angular|prototype|backbone|emberjs|sencha|scriptaculous).*\.js') type, pageid | |

| FROM [httparchive:runs.2013_06_01_requests] | |

| WHERE REGEXP_MATCH(url, r'dojo|angular|prototype|backbone|emberjs|sencha|scriptaculous.*\.js') | |

| GROUP BY pageid, type | |

| ) | |

| GROUP BY pageid | |

| HAVING cnt >= 2 | |

| ) as lib ON lib.pageid = pages.pageid | |

| WHERE rank IS NOT NULL | |

| ORDER BY rank asc |

| SELECT DOMAIN(req.url) third_party, COUNT(*) num_requests | |

| FROM [httparchive:runs.2013_06_01_requests] as req JOIN ( | |

| SELECT DOMAIN(url) self, pageid | |

| FROM [httparchive:runs.2013_06_01_pages] | |

| ) as pages ON pages.pageid = req.pageid | |

| WHERE DOMAIN(req.url) != pages.self | |

| GROUP BY third_party | |

| ORDER BY num_requests desc LIMIT 10; |

Resources

- Big Query starting page on developers.google.com

- App Script code to work with Big Query

- Ilya’s post on http archive and big query

Excelente. Muchas gracias por los aportes.

Thank you!

YEAH!

Pingback: Big Query And App Script (GDL Israel) - TI Nacional